Can science make you good?

Of course it can’t, some will be quick to say—no more than repairing cars or editing literary journals can. Why should we think that science has any special capacity for moral uplift, or that scientists—by virtue of the particular job they do, or what they know, or the way in which they know it—are morally superior to other sorts of people? It is an odd question, maybe even an illogical one. Everybody knows that the prescriptive world of ought—the moral or the good—belongs to a different domain than the descriptive world of is.

This dismissal may capture the way many of us now think about the question, if indeed we think about it at all. But there are several reasons why it may be too quick.

First, there are different ways of understanding the question, and different modern sensibilities follow from the different senses such a question might have. Some ways of understanding it do lead to the glib dismissal, but other ways powerfully link science to moral matters. Here are just a few of the ways we might think about the relationship between science and virtue, about whether aspects of science have the power to make us good:

• Is there something about what scientists know that makes them better people than the normal run of humankind? Are different sorts of scientists—physicists, mathematicians, engineers, biologists, sociologists—more or less virtuous? And do some sorts of scientific expertise count as moral expertise?

• Are scientists recruited from a section of humankind that is already better than the norm?

• Is there something scientists know that, were it widely shared with non-scientists, would make the rest of us better? Or is there something about how scientists come to their knowledge—call it the scientific method—that would make the practices of non-scientists better, were they to master it? Would wide application of the scientist’s way of knowing make our society fairer, more just and flourishing?

• Is there something about scientists that qualifies them to intervene in social and political affairs and make decisions about all sorts of things, including, but not confined to, the social uses of their knowledge? Is a philosopher-king, or a scientist-politician, an anomaly, an absurdity, or a highly desirable state of affairs? Would a world governed by scientists be not only more rational but also more just?

The ideas and feelings informing the tendency to separate science from morality do not go back forever. Underwriting it is a sensibility close to the heart of the modern cultural order, brought into being by some of the most powerful modernity-making forces. There was a time—not long ago, in historical terms—when a different “of course” prevailed: of course science can make you good. It should, and it does.

A detour through this past culture can give us a deeper appreciation of what is involved in the changing relationship between knowing about the world and knowing what is right. Much is at stake. Shifting attitudes toward this relationship between is and ought explain much of our age’s characteristic uncertainty about authority: about whom to trust and what to believe.

It is rarely a bad idea to start with the Greeks.

“All men by nature desire to know” is the first sentence of Aristotle’s Metaphysics. The drive for knowledge, from this point of view, marks what it is to be human, making people both happy and good.

This notion went out of fashion with early Christianity, when curiosity became a vice, related to pride. But Protestantism had a more favorable view of knowledge than did Catholicism. The Protestants studied by the German sociologist Max Weber wanted to know, in particular, whether they were saved or damned, but the seventeenth-century English Puritans studied by the American sociologist Robert Merton wanted to know about the natural world.


There were several reasons why the Puritans thought the human desire for knowledge of the world fulfilled a religious duty. One was that the human body was God’s temple: God endowed it with its capacities, with the divine intention that they be used. Because the God-given faculties of reason and observation lifted us above the beasts, making us a little like the angels, their use should not be restricted. There could not be such a thing as too much knowledge, since our capacity to know whatever we could know was a divine gift. That drive to knowledge—a religious drive—could be directed anywhere: anything that one could legitimately know, one should know. But it was understood with special religious force when the object of knowledge was nature—that is, when one was doing observational or experimental science.

The trope that expressed this attitude best was the Book of Nature, the second of the two books written by God to make His attributes and intentions accessible to man. (The first book, of course, was Scripture.) The figure appears, possibly for the first time, with Saint Augustine in the fourth century. It endures throughout the Middle Ages, but it acquires new and powerful meaning in the seventeenth and eighteenth centuries, when it is invoked by many writers on many subjects. Galileo famously used it to prescribe how nature should be studied:

Philosophy is written in that great book which ever lies before our eyes—I mean the universe—but we cannot understand it if we do not first learn the language and grasp the symbols, in which it is written. This book is written in the mathematical language, and the symbols are triangles, circles and other geometrical figures, without whose help it is impossible to comprehend a single word of it.

By “philosophy” Galileo meant “natural philosophy,” but this term does not translate simply into our modern notion of science, or even physics. Galileo was speaking of two ways of knowing that at the time were generally taken to be distinct, the one called philosophy and the other mathematics.

The aim of philosophy was knowledge of causes and of the nature of things: what makes bodies move in certain ways, for example, and what they are made of. The aim of mathematics, on the other hand, was predictive knowledge, such as where you could expect to find Jupiter at any given time, rather than knowledge of what caused it to move in the heavens or what it was made of. Galileo understood and worked with this distinction, as did Isaac Newton in The Mathematical Principles of Natural Philosophy (1687). Many celebrate their work as the origin of modern science, but both Galileo and Newton puzzled some of their contemporaries, who thought that they had slipped into a confusion of disciplines. The subsequent career of this distinction is worth bearing in mind as we consider the moral bearings of scientific work: the natural philosopher occupied terrain shared with the theologian; the mathematician did not.

The trope of nature’s book was available in the seventeenth and eighteenth centuries to justify science to anyone who thought that it might make people irreligious. But there was little cause for worry. Robert Boyle, Robert Hooke, and Newton were far from alone among scientific practitioners who argued that their studies could not possibly have such an effect. Reading the Book of Nature, finding the expert interpretative code to do so, was precisely like reading Scripture. It was a way to God and to godliness. Boyle said he worked in his laboratory on Sundays because he saw his scientific work as a form of divine worship.

The movement “from Nature up to Nature’s God,” as Alexander Pope wrote in the 1730s, became one of the great cultural institutions of the period between the seventeenth and nineteenth centuries. This tradition was known as natural theology, and the young Charles Darwin read some of its basic texts at Cambridge, where he was deeply impressed by the power of the crucial “argument from design.” Take apart a watch, observe the superb adaptation of complex structure to function, and you cannot but conclude that it is the product of a designing intelligence. Reasoning in the same way about a natural structure, such as the eye of an insect, the natural theologian likewise concludes that such a thing must have been designed, but by divine, rather than human, intelligence.

For those who accepted natural theological modes of reasoning, science was a God-proving activity because it uncovered the evidence of intelligent design. It uplifted not only those who practiced it but also those who encountered its picture of the world in books and classrooms: they too learned to see divine design all about them. Inquiry within this framework rendered theology rational at the same time that it rendered science moral. It was a powerful and persistent cultural form.

Mathematical practice, as opposed to natural philosophy, did not participate in this theologically flavored enterprise. Even Galileo was able to insist on the difference between what he was doing and the proper and particular concerns of the Church. Under pressure from the Inquisition, he defended his Copernicanism by claiming that the heliocentric system might be mathematically useful even if it was not philosophically true. Calculating planetary positions simply went better on the Copernican model. He likewise marked the distinction between mathematics and theology when he said that the purpose of astronomy was to teach people the way the heavens go, not to teach them how to go to heaven.

The most philosophically consequential attack on these sensibilities relating science and virtue came from David Hume in the 1730s. He read a lot of theology and what we would now call sociology and was puzzled by how arguments in those fields tended to go. The writers would be describing social arrangements or the existence of God (following, Hume said, “ordinary ways of reasoning”), and then, all of a sudden, and without remarking on it, there would be an “imperceptible” change: the author would move from writing about what is or is not to writing about what ought or ought not to be. But the is and the ought belong to different orders; it is “altogether inconceivable” that you could deduce the one from the other. The wider implications of Hume’s argument were obvious enough. If you can’t go from is to ought, then natural theology has no logical foundation: you can’t reason your way from nature to morality.

A similar strain of thinking emerged much later, in the early twentieth century, when philosophers (most influentially G. E. Moore, in his 1903 Principia Ethica) formally identified the so-called naturalistic fallacy: the logical mistake of defining what is moral or good through such properties as pleasantness or desirability or instrumental advantage or, indeed, the natural itself. (Think, for example, of utilitarianism and its modern econometric progeny.) You can’t logically deduce the right thing to do by reducing it to properties that don’t belong to moral discourse.

The source of the Hume-like sentiments with which social scientists and historians are likely to be most familiar is Weber’s lecture “Science as a Vocation,” delivered in 1917 in Munich. The world, Weber said, has become “disenchanted.” In principle, everything can be known by rational calculation; there is nothing that is not calculable. Scientists may have once believed that they could show you the way to God or discover the “meaning” of creation, but not anymore, Weber said. “Except for some big children” still to be found in academic science departments, no one believed that science could be a way to God; it is in its very nature an “irreligious power.” If the sciences teach us anything about meaning, it is that we cannot get there from here. And if there is such a thing as the meaning of the world, there is no scientific way to discover it.

Weber represented what he was doing as science. He put himself in the same institutional and cultural boat as chemists and zoologists. Addressing the Munich students who were his audience, he said that people like them expected people like him to tell them what to do. But they were making a mistake. There was nothing in what he knew as a scientist that gave him any authority to define moral action, the right thing to do. If he did so, he would be abandoning the very thing that gave his calling its meaning. Putting himself professionally on the fact side of the fact-value distinction, Weber suggested that the only morality or meaning arising from the practice of science was the manly embrace of amorality and meaninglessness. Allying himself with Leo Tolstoy, he insisted that science gives no answer to the question “how to live”—or, as the existentialists later liked to say, “Everything has been figured out, except how to live.”

So a natural science without any capacity for moral uplift, and grown-up scientists (so to speak) without moral authority, are recent creations in historical terms. Both the disenchantment of the world and the supposed invalidity of inferring ought from is derive from the historical development of a conception of nature stripped of the moral powers it once possessed. That development reached its culmination in the science and metaphysics of Darwin and the scientific naturalists of the late nineteenth century. Their modern conception of nature could not make those who studied it more moral than anyone else because no sermons in stones were to be discerned. Nature, said the great nineteenth-century biologist T. H. Huxley, “is no school of virtue.”

The insistence that science cannot make you good, or make the scientist into a moral authority, flowed from a natural philosophical position: there are no spiritual forces operating in nature and there is no divine meaning to be discerned in nature. Weber, in other words, was making a sociological claim about what properly belongs to certain social roles, but that claim rested on historical changes in science and metaphysics.

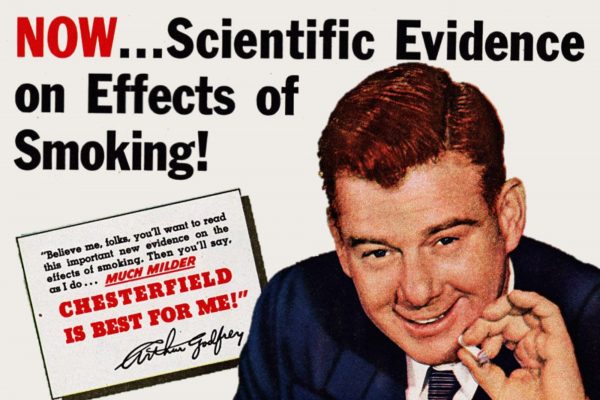

This attitude had significant ramifications. Sometime between the beginning and the middle of the twentieth century—especially in America but in other settings too—the idea of the scientist shed its remaining priestly associations, and a presumption of moral specialness gave way to moral ordinariness. There was no single cause of this change; shifting conceptions of the world that scientists interpreted had much to do with it. But it was accompanied by notable developments in the nature of the scientific career, in the social relations and cultural standing of the scientific community, and in changing academic and lay ideas about what sort of thing science was and what it was for.


First, there were a lot more scientists by mid-century. The growth in those numbers was so remarkable that in the 1960s one sociologist predicted it would have to stop soon lest there be two scientists for every man, woman, child, and dog in the country. In a demographic sense, the scientific career was becoming more normal and less of an oddity.

Second, the process of transforming scientific research from a calling to a job, from an amateur to a professional pursuit, was substantially completed in the twentieth century. Darwin never received a salary for his work. Even after the Second World War, and the increasing inclusion of American scientists in the materially comfortable middle classes, there were still researchers who expressed concerns about the rise of professionalism and the decline of scientific asceticism: the “true scientist,” the cancer researcher Frederick S. Hammett wrote in Science, is “only concerned with following his vocation.” And in the mid-1950s the physicist Karl Compton said of scientists in general, “I don’t know of any other group that has less interest in monetary gain.”

Third, by the early twentieth century, scientists were increasingly employed by research laboratories attached to large industrial corporations and government establishments, often with ties to the military. From the 1940s, American sociologists were beginning to give accounts of something newly designated as “the scientific community.” And while Merton discerned in the “norms” of this community many of the values of a liberal, meritocratic, and open society, he insisted that there is no “satisfactory evidence” that scientists are “recruited from the ranks of those who exhibit an unusual degree of moral integrity.” He urged that structural norms were not to be confused with psychological dispositions.

Finally, by the early 1960s, Thomas Kuhn’s picture of “normal science” portrayed scientific activity not as an open-minded philosophical quest but as puzzle-solving—the extension and application of existing paradigms. To the shock and indignation of some, Kuhn argued that being a scientist involved obedience to “dogma” and a narrowing of perception. Science remained, of course, the most reliable knowledge we had, but whatever moral authority might follow from regarding science as uniquely free of prejudice was—for those persuaded by Kuhn—no longer available.

In 1961 President Eisenhower’s Farewell Address identified the “military-industrial complex” as a new threat to both democracy and the integrity of science, further reflecting the distance science had traveled from an age when it was presumed a pursuit of special moral status. Senator J. William Fulbright’s later expansion to the “military-industrial-academic complex” recognized that universities were no longer to be thought of as disengaged ivory towers; they had become crucial resources for both the economy and the national security state. Hiroshima and the Cold War arms race propelled the issue of the social responsibility of science into prominence. Only when science had something terrible for which it might be held accountable could there be a serious debate about whether scientists were the sort of people who could or should take moral responsibility for the knowledge and artifacts they produced. Scientists had, for the most part, given up asserting their moral superiority; now, many of them argued that scientists should not be thought of as worse than anyone else. Robert Oppenheimer worried that he had “blood on his hands,” but many other scientists insisted that Hiroshima was not their fault: they were following democratically legitimate orders.

Post–World War II science had new power and enjoyed new scope. One measure of its enormous success was the extent to which it had come to be enfolded in the everyday institutions and practices of government, production, and war. Science’s goals were increasingly identified with the goals of those institutions, and its ways of doing things with theirs. One consequence is that a great deal of scientific inquiry has merged with institutions whose goals are presumed to include profit and power, not the disinterested search for truth, and certainly not moral uplift.

Much of the historical distinction between natural philosophy and mathematics reappears in more recent times as that between science and technology, the former aimed at knowledge for its own sake and the latter at power and control. Not so long ago—as evidenced by Weber’s 1917 lecture—this distinction was a matter of insistence: science was said to be misunderstood and demeaned by conflation with technology. Now, however, scientists and their paymasters work hard to identify science with technology, wanting nothing more than to have the authority of science supported by the utility of technology. This is one of the more visible signs of the folding of science into normal civic sensibilities. But when you model the search for knowledge on the search for power, you disrupt the historical association between the scientist and the priest and, substantially, between the idea of science and the idea of moral uplift.

Breaking that association has had its advantages. There are still many millions of Weber’s “big children” around who think that nature is a divine creation and that its study yields moral lessons, but few of them are now to be found in university physics and chemistry departments. (The disenchantment of the world looks more plausible within the confines of research universities than it does off campus.) So accepting that science, of course, cannot make you good is just an acknowledgment of the world’s disenchantment and of the massive achievements of amoral modern science. With the existentialists, “grown-ups” now recognize that solutions to problems of meaning and morality can come only from us and not from above—and certainly not from scientists. Morality cannot be outsourced.

Writing after World War II, Oppenheimer warned against thinking of scientists as having the answers to all questions or the power to solve them. If scientists were indeed the stewards of a unique, coherent, and powerful method, that stewardship showed, at most, in a certain modesty of manner and judgment, notably including humility about the scope of their knowledge. “Science is not all of the life of reason; it is a part of it,” he wrote. Scientism—the tendency to think one could extend scientific method everywhere and thereby solve problems of morality, value, aesthetics, and social order—was just sloppy thinking.

The scientism Oppenheimer warned against had a history. It traces back to nineteenth-century social Darwinism and the advertised reduction of morality to biology. This was exactly the sort of reasoning the naturalistic fallacy targeted—the notion that what was moral could be rendered in terms of what biological evolution had formed us to do or to feel. So if it was natural for us to war with each other in order to pass on characteristics to our offspring, then a moral problem was solved—that was what we should do. And if it was natural for us to cooperate or to behave altruistically to related or non-related others, then that too was what we should do. Moral instincts or inclinations were unveiled as natural phenomena, amenable to the methods and concepts of natural science. So-called evolutionary ethics bid to give a scientific solution to such questions as “What ought we to do?” and “What is moral?”

This Victorian scientism had a future, and it now has a substantial present. In the modern American academy and in intellectual publishing, scientism, and specifically the redefinition of moral problems as scientific problems, is resurgent. Moral problems are not so much solved as dissolved. Talk of moral problems is treated as a mere manner of speaking (une façon de parler), a regrettable modern survival of a discredited dualism. Science assumes, or reassumes, its moral role by showing that traditional moral authorities are naked, and that what counted as moral problems are best, even only, addressed by the resources of the scientist. Science, it is now claimed, will show us what is good and how to live the good life; and if it does not now have the ability to do so fully and effectively, then we should rest assured that it soon will. Science will cure problems of moral relativism, and it will reveal the objective truth of some set of moral positions as opposed to fraudulent others. Morality, neuroscientist Sam Harris writes, “should be considered an undeveloped branch of science,” and science, he says, “can determine human values.” The cognitive scientist Steven Pinker moves from a bet about the future to a confident, if qualified, statement of current realities:

The worldview that guides the moral and spiritual values of an educated person today is the worldview given to us by science. Though the scientific facts do not by themselves dictate values, they certainly hem in the possibilities. By stripping ecclesiastical authority of its credibility on factual matters, they cast doubt on its claims to certitude in matters of morality.

According to this newly confident scientism, science is the only bit of culture that can make you good because it trumps all the others—religion, traditional ethical codes, common sense. Or it shows them to be nonsense. Or—with or without awareness of the irony—it brands them immoral: religion is a “God delusion,” licensing prejudice, servility, and slaughter, all of which are morally wrong.

But there are several reasons why the ambitions of the new scientism may be self-limiting. Those who speak in the name of nature must face the fact that nature has never spoken with one voice. Different scientists draw different moral inferences from science. Some have concluded that it is natural and good to be ruthlessly competitive; others see it as natural to cooperate and trust; still others embrace the lesson of the naturalistic fallacy and oppose the project of inferring the moral from the natural. That was the basis of T. H. Huxley’s skepticism in 1893:

The thief and the murderer follow nature just as much as the philanthropist. Cosmic evolution may teach us how the good and the evil tendencies of man may have come about; but, in itself, it is incompetent to furnish any better reason why what we call good is preferable to what we call evil than we had before.

Nor does the new scientism solve the long-standing problem of whom to trust. Just like every modern scientist, the advocates of the new scientism do what they can to sell their wares in the marketplace of credibility. And here the new scientism, for all its claims that there is a way science can make you good, shares one crucial sensibility with its opponents: having secularized nature, and sharing in the vocational circumstances of late modern science, the proponents of the new scientism can make no plausible claims to moral superiority, nor even moral specialness.

Resurgent scientism is less an effective solution to problems posed by the relationship between is and ought than a symptom of the malaise accompanying their separation. So there is a price to be paid for the of-courseness of the view that scientists are morally no better than anyone else, and among those paying it are scientists themselves. The idea that scientists are priests of nature, that they are morally uplifted by the study of God’s Book of Nature, may be dead—as Weber suggested, that is central to what modernity means—but the question of whether scientists are selflessly dedicated to truth remains alive and is central to contemporary tensions surrounding scientific expertise and public policy.

If the disinterestedness and selflessness of scientists can be no more relied on than that of bankers, then scientific conclusions should be no more trusted than financial derivatives, and science should be policed in the same way as the banking industry. Regimes of surveillance and control are a modern indication of distrust. Yet science, like the financial system, works on credit, and, while there is excellent sense in subjecting both scientific and financial conduct to a degree of regulation, there is no sense at all in thinking that surveillance can ever eliminate the need for trust. If you don’t find scientists trustworthy, if you think of them as mere servants of power and profit, then the ultimate price to be paid is that you’ll have to do the science yourself—and good luck to you in making your findings credible.

So the cost of modern skepticism about scientific virtue is paid not just by scientists but by all of us. The complex problems once belonging solely to the spheres of prudence and political action are now increasingly conceived as scientific problems: if the global climate is indeed warming, and if the cause is human activity, then policies to restrict carbon emissions are warranted; if hepatitis C follows an epidemiological trajectory resulting in widespread liver failure, then the high price of new drugs may be justified. The success of modern is-expertise has propelled it powerfully into the world of ought-judgment.

That is why there can be no glib “of course” about discarding the idea of scientific virtue. We need to trust scientists, but we need scientists to be trustworthy.