Humility has not always been among the popular virtues. While open-mindedness, curiosity, honesty, integrity, and perseverance are widely regarded as scientific virtues, humility is frequently left out. Nor has it enjoyed much success as a political virtue. Hume notoriously dismissed humility as a “monkish virtue,” arguing that it is neither useful nor agreeable to the self or to others. By contrast, Sheila Jasanoff aims not only to revive humility as a scientific and political virtue, but to make it central to society’s approach to dealing with disasters involving science and technology. An ethos of humility, she argues, is the key to dealing better with the next crisis that is sure to come.

The COVID-19 pandemic—like climate change, financial crises, hurricanes, and nuclear accidents—exposed the limits of scientific prediction and control. It was defined from the start by uncertainty, complexity, and the absence of adequate knowledge. Perhaps the one foreseeable thing was that there would be unforeseen events at every turn. Jasanoff grounds her plea for humility in the uncertainty and unpredictability that characterize such crises. The defining feature of humility, after all, is awareness of limitations—of one’s knowledge and ability to gain knowledge. Humility demands that we acknowledge the likelihood of mistakes and accept the possibility of defeat rather than being confident in our predictions and capacity for control.

To evaluate the aptness of humility as a response to uncertainty and non-knowing, we must first reflect on the source of the limitations that humility would have us confront. While I agree that some inherent limitations of scientific inquiry are appropriately faced with humility, I will argue that there is another kind of limitation for which humility is at best an inadequate response and at worst a counterproductive one. Jasanoff conflates the two in her essay; my aim here is to disentangle them.

It is almost a truism that scientific knowledge is inherently uncertain, fallible, and incomplete. The sources of this uncertainty vary. It may be due to the limits of the evidence currently available: the evidence may be flawed in some way, or the information necessary to settle a scientific dispute may simply be lacking. Uncertainty may also stem from the complexity of the natural or social processes under study: their causal structures may not be fully understood, or they may involve such radical uncertainty that scientists may never be able to predict their outcomes with much success. Scientists themselves are usually the first to acknowledge uncertainty and non-knowing due to these limitations. They will admit that scientific beliefs at any given time are tentative and fallible and will change when the evidence changes. In the face of these limitations, it certainly makes sense to adopt an attitude of humility: we must attend to the possibility of mistakes and take precautions to make them less costly.

But knowledge and understanding can be uncertain, or entirely absent, in another way too: because of choices made about what types of knowledge should be pursued, and how. Those in a position to choose which scientific questions to ask can thereby shape what counts as significant knowledge and what can be bracketed or left out altogether. Any decision about what knowledge to pursue is also a decision about what areas of uncertainty and ignorance we can live with, and whose problems we can safely ignore. In short, the limits of our knowledge and of our ability to control disasters arise not just from the intrinsic limits of human powers and the complexity of natural and social systems but also from choices about where to put our limited resources of time, money, and attention. What counts as a known unknown and what remains an unknown unknown are not intrinsic facts about the world; they are endogenous to past choices about which knowledge scientists and other experts would pursue. Our ignorance as a society has specific contours, and those who are involved in seeking knowledge and making policy have some control over what it looks like.

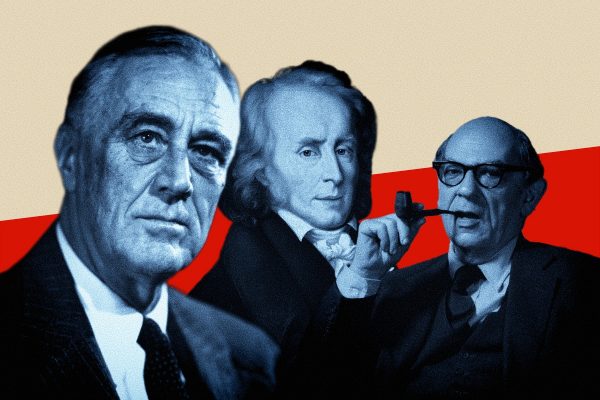

Jasanoff’s essay offers good examples of the kinds of knowledge that were notably absent from three major pandemic planning efforts. The Global Health Security Index model did not include variables on political polarization and trust, income inequality, racial disparities, or the marginalization of groups and regions in its calculations. The Obama administration’s playbook overestimated the nation’s willingness, as Jasanoff puts it, to “set aside politics and act in unison for the common good.” Fineberg’s call for unified command across the public and private sectors presupposed a United States that never was or had long ceased to be. All three assumed public buy-in and full compliance. Their assumptions about political unity were naïve. They did not pay enough attention to the needs of the most vulnerable: the poor, the elderly, and Black, Hispanic, and Native American communities. Vaccine rollout plans did not adequately anticipate vaccine hesitancy.

I could multiply the examples. Early COVID-19 models from Imperial College London and the University of Washington’s Institute for Health Metrics and Evaluation, which played a crucial role in shaping the policy response in the United States and the United Kingdom, focused on short-term health outcomes and neglected the economic and social impacts of policies. They failed to take a holistic approach to health, leaving out the mental and physical health toll of social isolation and a severe economic downturn, rising rates of domestic violence and substance abuse, delayed treatment for other diseases, and missed vaccinations for children. They did not consider how patterns of social behavior and interaction would affect infection rates. Nor were there enough studies of how COVID-19 was affecting different population subgroups along racial, ethnic, and class lines, or of the differential impacts of lockdowns and school closures.

There is a clear pattern here. In all these cases, a different kind of knowledge that would have allowed for better planning could have been pursued but wasn’t. Consistently missing was knowledge about social and political factors, about the needs of the most vulnerable communities historically neglected by science, and about human behavior and social interactions. Also missing were a broader understanding of health, social scientific and humanistic approaches, and more interdisciplinary, responsive, and democratic sources of knowledge. The problem lay not in the aspiration to preparedness but in a technocratic approach to preparedness that sidelined certain variables as irrelevant and ignored political realities and citizens’ needs.

In responding to this second type of failing, which stems not from limits inherent to science but from negligence or bad choices, humility may not be the right solution. Emphasizing the limits of scientific knowledge and calling for humility can absolve scientists and government officials of responsibility for failing to produce the right kind of knowledge. It can serve to excuse not knowing what was in fact knowable, and not being prepared for what we should have seen coming. It can deflect citizens’ rightful criticisms by suggesting that laypeople simply fail to grasp the uncertainty and incompleteness of science. A blanket rhetoric of humility that does not distinguish between the different reasons our knowledge and capacity for control are limited thus obscures the difference between limitations we could and could not have removed.

Recognizing and accepting these limitations may still be preferable to denying them, but humility is not particularly useful for addressing and overcoming them. While it directs us to reflect on what we cannot do, it does not help us make better choices about what we can do. The need for humility arises here because important questions have not been asked and attainable knowledge has not been pursued. This is not a state we must accept, but one we should learn from and leave behind. The more relevant virtue in preparing for the next crisis is a willingness to improve, expand, and integrate our knowledge. The aim should be to produce a body of knowledge accountable to everyone, rather than to respond with humility when it falls short in foreseeable ways.