Daron Acemoglu highlights the worrisome direction of AI development today—replacing jobs, increasing inequality, and weakening democracy. But he concludes by emphasizing that “the direction of AI development is not preordained.” I agree—and one way we must “modify our approach” is to think of AI less as a substitution for human work and more as a supplement to it.

The current narrative about AI puts it in competition with humans; we need to think instead about the possibilities—even the necessity—of human and AI collaboration. AI tends to perform well on tasks that require broad analysis and pattern recognition of massive data, whereas people excel, among other things, at learning from very limited data, transferring their knowledge easily to new domains, and making real-time decisions in complex and ambiguous settings.

Over the last decade, deep learning has shown great improvements in accuracy in many areas (including visual recognition of objects and people, speech recognition, natural language understanding, and recommendation systems), driven by an explosion in data and computational power. As a result, businesses across all sectors are trying to exploit AI and deep learning to improve efficiency and productivity. This typically leads them to automate limited and repetitive tasks rather than look holistically at the complete workflow, go beyond what is easy to automate, and figure out how to solve the problem differently.

The automation-only approach indeed has its limits. First, such solutions tend to be fragile and don’t adapt well to specific environments or changes in tasks over time. Second, these solutions, trained on large datasets, often encounter cases they were not trained for, resulting in many failures. Current approaches focus on adding more data to the training, resulting in exponential growth of models and training requirements. In fact, one study on the computational efficiency of deep learning training showed that since 2012, the amount of computation used in the largest training runs has been increasing exponentially at a staggering rate, doubling roughly every 3.4 months. Finally, automation-only approaches tend to produce black boxes, without insights for users on how to interpret or evaluate their decisions or inferences—further limiting their usefulness.
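To give a sense of scale, the doubling time quoted above can be turned into a yearly growth factor. The sketch below assumes the 3.4-month doubling time stays constant, which simplifies the cited trend; the function name is illustrative and not taken from the study.

```python
def growth_factor(months: float, doubling_months: float = 3.4) -> float:
    """Multiplicative growth over `months`, assuming a fixed doubling time."""
    return 2.0 ** (months / doubling_months)


# Under this assumption, compute grows roughly 11.5-fold in a single year.
per_year = growth_factor(12)
print(f"Growth over 12 months: about {per_year:.1f}x")
```

For comparison, a quantity that doubles every two years grows only about 1.4-fold per year under the same formula.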

What if we thought about work more holistically, as a collaboration of human and AI capabilities? In their 2018 Harvard Business Review study “Human + Machine: Reimagining Work in the Age of AI,” H. James Wilson and Paul Daugherty report that their research on 1,500 companies in 12 domains showed that performance improvement from AI deployments increased proportionally with the number of collaboration principles adopted. (The five principles studied were reimagining business processes, embracing experimentation and employee involvement, actively directing AI strategy, responsibly collecting data, and redesigning work to incorporate AI and cultivate related employee skills.) This improvement is not surprising when we consider the complementary nature of human and AI capabilities: people can train, explain, and help to sustain the AI system and ensure accountability, while AI can amplify certain cognitive strengths, interact with customers on routine tasks, help direct trickier issues to humans, and execute certain mechanical skills via robotics.

Systems that leverage the strengths of both can deliver compelling, efficient, and sustainable solutions in overall task performance, in training the AI system, in training people—or all of the above. But creating collaborative systems poses unique challenges we must invest in solving. These systems need to be designed to perceive, understand, and predict human actions and intentions, and to communicate interactively with humans. They need to be understandable and predictable—and to learn from and with users in the context of their deployed environments, in the midst of limited and noisy data.

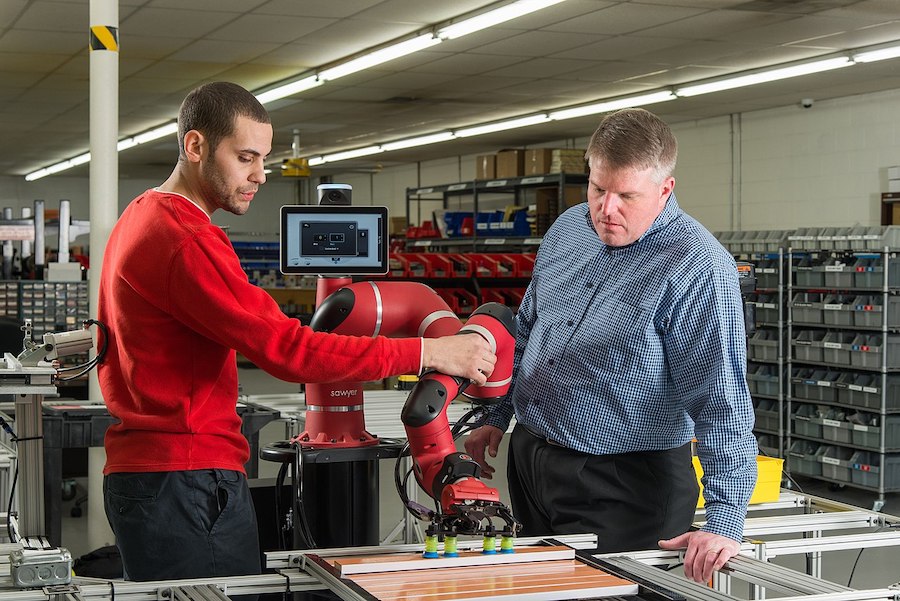

Consider manufacturing. While automation is changing the way we build factories, we still rely on humans in many tasks, especially in troubleshooting, machine repair, system configuration optimization, and decision-making. If an AI system can aid engineers and technicians in performing these tasks and making decisions (if it can observe and recognize their activities, understand the context, bring them relevant information in the moment, and highlight anomalies and discrepancies), people can make better decisions, reduce deviations, and fix errors sooner. In addition, systems that collaborate with many users can recognize and draw attention to similarities and differences in human behavior, thus facilitating human learning across groups of people who might never have worked together.

The AI system, in turn, can evolve over time with the user’s help. When a task changes, or procedures are modified, it can rely on the user’s input to modify its understanding, capture relevant examples, and retrain itself to adapt to the new setting. For this approach to work, the system needs certain capabilities. First, it needs to be able to observe users, infer their state robustly, and predict their intentions and actions, and even their level of engagement and emotional state. Second, the system needs to communicate efficiently with users around a shared understanding of the physical world. People do this all the time when they work together; they refer to objects, gesture and point to resolve ambiguity, predict and anticipate future actions, and proactively intervene when necessary. We need to go beyond our current chatbots to multimodal, contextually aware, interactive systems. Third, the collaborative system needs to learn from users incrementally, constantly adjusting its perception and decision-making based on users’ input. It needs to have a principled understanding of its uncertainty, seek more data to improve its learning, and explain its actions.

While achieving these capabilities might seem daunting, the payoffs will extend well beyond manufacturing. For example, research in education shows the high potential for human/AI collaboration to improve learning outcomes. Pedagogical research suggests that student engagement and personalization result in better learning outcomes. Collaborative systems have the potential to help teachers understand the unique needs of every student and personalize their learning experience. Or, as in the kind of remote learning situations we are experiencing during the COVID-19 pandemic, AI systems might work with students as they perform different tasks, assisting them and providing insights to the teachers to help them address students’ different needs. This is even more critical in early childhood education. But, as in the case of manufacturing, these systems need to be able to understand the students’ actions, communicate with them in the context of their shared physical environment, and learn from the students and the teachers over time to develop robust capabilities. There are similar opportunities for AI systems with these capabilities in elder care, in support for people with disabilities, and in health care.

If we want to change the prevailing narrative from human/AI competition to human/AI collaboration, we will no doubt face challenging design issues—as well as serious privacy and safety challenges. But success would mean higher value, more scalable and adaptive solutions, and better societal outcomes in the long run.