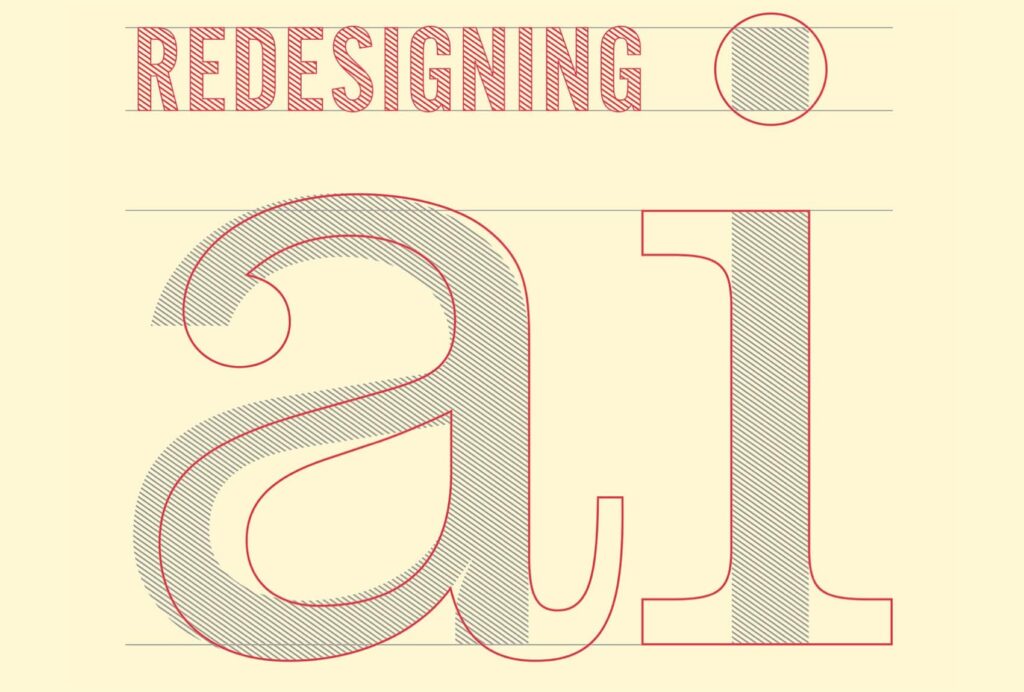

This note is featured in Redesigning AI.

Our world is increasingly powered by artificial intelligence. The singularity is not here, but sophisticated machine-learning algorithms are—revolutionizing medicine and transport; transforming jobs and markets; reshaping where we eat, who we meet, what we read, and how we learn. At the same time, the promises of AI are increasingly overshadowed by its perils, from unemployment and disinformation to powerful new forms of bias and surveillance.

Leading off a forum that explores these issues, economist Daron Acemoglu argues that the threats—especially for work and democracy—are indeed serious, but the future is not settled. Just as technological development promoted broadly shared gains in the three decades following World War II, so AI can create inclusive prosperity and bolster democratic freedoms. Setting it to that task won’t be easy, but it can be achieved through thoughtful government policy, the redirection of professional and industry norms, and robust democratic oversight.

Respondents to Acemoglu, including economists, computer scientists, labor activists, and others, broaden the conversation by debating the role new technology plays in economic inequality, the range of algorithmic harms facing workers and citizens, and the additional steps that can be taken to ensure a just future for AI. Some ask how we can transform the way we design AI to create better jobs for workers. Others urge that we need new participatory methods in research, development, and deployment to address the unfair burdens AI bias has already imposed on vulnerable and marginalized populations. Still others argue that changes in social norms won’t happen until workers have a seat at the table.

Contributions beyond the forum expand the aperture, exploring the impact of new technology on medicine and care work, the importance of workplace training in the AI economy, and the ethical case for not building certain forms of AI in the first place. In “Stop Building Bad AI,” Annette Zimmermann challenges the belief that something designed badly can later be repaired and improved, an industry-wide version of the Facebook motto to “move fast and break things.” She questions whether companies will police themselves, and instead calls for new frameworks for determining what kinds of AI are too risky to be designed at all.

What emerges from this remarkable mix of perspectives is a deeper understanding of the current challenges of AI and a rich, constructive, morally urgent vision for redirecting its course.