Rare is the CEO today who, in the face of public concern about a potentially dangerous product, says, “Let’s hire the best scientists to figure out if the problem is real and then, if it is, stop making this stuff.”

In fact, evidence from decades of corporate crisis behavior suggests exactly the opposite. As an epidemiologist and the former U.S. Assistant Secretary of Labor for Occupational Safety and Health under President Obama, I have seen this behavior firsthand. The instinct for corporations is to take the low road: deny the allegations, defend the product at all costs, and attack the science underpinning the concerns. Of course, corporate leaders and anti-regulation ideologues will never say they value profits before the health of their employees or the safety of the public, or that they care less about our water and air than environmentalists do. But their actions belie their rhetoric.

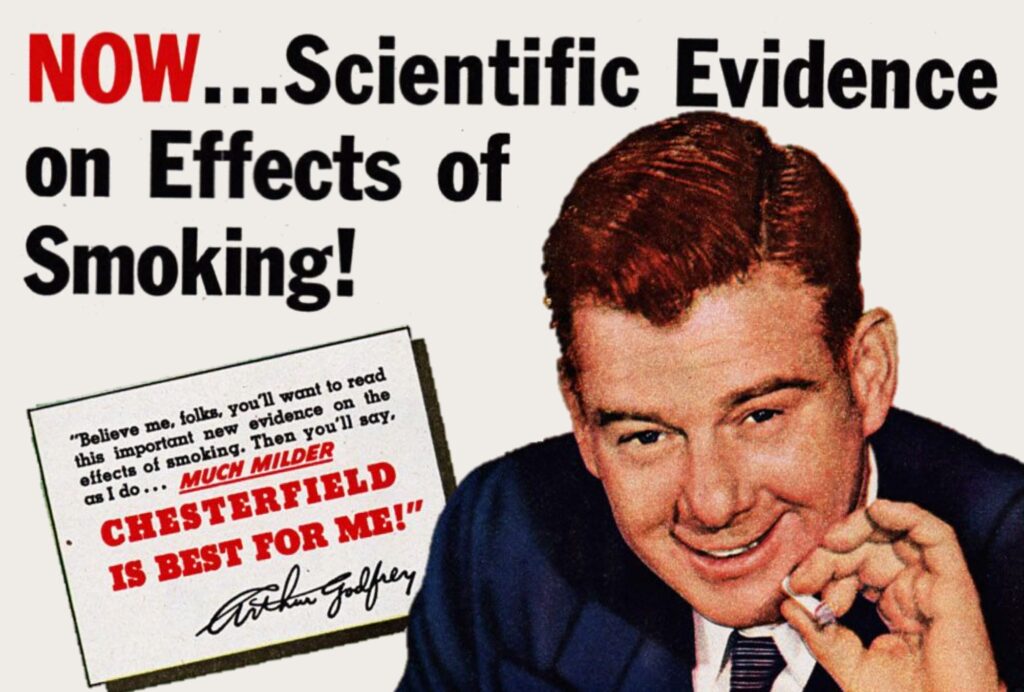

Decision makers atop today’s corporate structures are responsible for delivering short- and long-term financial returns, and in the pursuit of these goals they place profits and growth above all else. Avoidance of financial loss, to many corporate executives, is an alibi for just about any ugly decision. This is not to say that decisions at the highest level are black-and-white or simple; they are dictated by factors such as the cost of possible government regulation and potential loss of market share to less hazardous products. And, of course, companies are afraid of being sued by people sickened by their products, which costs money and can result in serious damage to the brand. All of this is part of the corporate calculus.

Unfortunately, though, this story is old news: most people, especially Americans, have come to expect corporations to put profit above all else. Still, we mostly don’t expect there to be mercenary scientists. Science is supposed to be constant, apolitical, and above the fray. This commonsense view misses the rise of science-for-sale specialists over the last several decades and a “product defense industry” that sustains them—a cabal of apparent experts, PR flaks, and political lobbyists who use bad science to produce whatever results their sponsors want.

• • •

There are a handful of go-to firms in this booming field. Consider, as a silly but representative example, the “Deflategate” controversy in the National Football League (NFL): the allegations that New England Patriots quarterback Tom Brady directed that footballs be deflated during the AFC Championship Game in January 2015. As part of the ensuing investigation, NFL Commissioner Roger Goodell hired an attorney who in turn hired Exponent, one of the nation’s best-known and most successful product defense firms.

These operations have on their payrolls—or can bring in on a moment’s notice—toxicologists, epidemiologists, biostatisticians, risk assessors, and any other professionally trained, media-savvy experts deemed necessary (economists too, especially for inflating the costs and deflating the benefits of proposed regulation, as well as for antitrust issues). Much of their work involves production of scientific materials that purport to show that a product a corporation makes or uses or even discharges as air or water pollution is just not very dangerous. These useful “experts” produce impressive-looking reports and publish the results of their studies in peer-reviewed scientific journals (reviewed, of course, by peers of the hired guns writing the articles). Simply put, the product defense machine cooks the books, and if the first recipe doesn’t pan out with the desired results, they commission a new effort and try again.

I describe this corporate strategy as “manufacturing doubt” or “manufacturing uncertainty.” In just about every corner of the corporate world, conclusions that might support regulation are always disputed. Studies in animals will be deemed irrelevant, human data are dismissed as not representative, and exposure data are discredited as unreliable. Always, there’s too much doubt about the evidence, and not enough proof of harm, or not enough proof of enough harm.

This ploy is public relations disguised as science. Companies’ PR experts provide these scientists with contrarian sound bites that play well with reporters mired in the trap of believing there must be two sides to every story equally worthy of fair-minded consideration. The scientists are deployed to influence regulatory agencies that might be trying to protect the public, or to defend against lawsuits by people who believe they were hurt by the product in question. Corporations and their hired guns market their studies and reports as “sound science,” but in reality they merely sound like science. Such bought-and-paid-for corporate research is sanctified, while any academic research that might threaten corporate interests is vilified.

Individual companies and entire industries have been playing and fine-tuning this strategy for decades, disingenuously demanding proof over precaution in matters of public good. For industry, there is no better way to stymie government efforts to regulate a product that harms the public or the environment; debating the science is much easier and more effective than debating the policy. In earlier decades—as documented in detail by a great deal of scholarship, including Naomi Oreskes’s and Erik Conway’s Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming (2010) and my earlier book Doubt is Their Product: How Industry’s Assault on Science Threatens Your Health (2008)—we have seen this play out with tobacco, secondhand smoke, asbestos, industrial pollution, and a host of chemicals and products. These industries’ strategy of denial is still alive and well today. Nor is this practice of hiring experts and hiding data about harms limited to health concerns and the environment. Beyond toxic chemicals, we see it with toxic information as well. (Consider the corporate misbehaviors of Facebook.)

This is not to assert that the conclusions of every study or report produced by product defense experts are necessarily wrong; it certainly is legitimate for scientists to work to prove one hypothesis in the cause of disproving another. One means by which science moves toward the real truth is by challenging and disproving supposed truth and received wisdom. Maybe there are two sides to every story—but maybe not two valid sides, and definitely not when one has been purchased at a high price, and produced by firms whose financial success rests on delivering the studies and reports that support whatever conclusion their corporate clients need.

The strategy of manufacturing doubt has worked wonders, in particular, as a public relations tool in the current debate over the use of scientific evidence in public policy. In the long run, product defense campaigns rarely hold up; some don’t pass the laugh test to begin with. But the main motivation all along has been only to sow confusion and buy time, sometimes lots of time, allowing entire industries to thrive or individual companies to maintain market share while developing a new product. Doubt can delay or obstruct public health or environmental protections, or just convince some jurors that the science isn’t strong enough to label a product as responsible for terrible illnesses.

Eventually, as the serious scientific studies get stronger and more definitive, and as the corporate studies are revealed as unconvincing or simply wrong (then generally forgotten, with the authors paying no penalty for their prevarications), the manufacturers give up and acknowledge the harm done by their products. Then they submit to stronger regulation, sometimes even costing themselves more money than they would have paid in the first place. But they can do the math: they have also been making a lot of money for all those years. Their wealth compounds. And as for the people who have been sickened or worse in the interim? Or the despoiled environment? Well, those are unfortunate. Sorry.

And what happens to the product defense firms? There’s always another opportunity to manipulate the vulnerabilities at the intersection of science and money. In Deflategate, Exponent’s official report enabled the NFL’s attorneys to assert, four months after the game in question was played, that experts found “no set of credible environmental or physical factors” that could completely account for the change in pressure of the Patriots’ footballs. Combined with other circumstantial evidence, “it was more probable than not” that the balls were intentionally deflated. To be fair, the NFL also relied on text messages and other evidence suggesting that the game balls may, in fact, have been deflated. But Exponent had done its job, providing the conclusion that was useful to the NFL to build the case for the quarterback’s guilt.

Yet Exponent’s report to the owners ended up being an embarrassment to the NFL. John Leonard, a roboticist and mechanical engineering professor at the Massachusetts Institute of Technology, was one of the early skeptics about Exponent’s work and conducted a series of analyses that demonstrated that the original calculations were incorrect and that “no nefarious deflation actually occurred.” Leonard’s very compelling lecture on YouTube has been viewed more than 500,000 times. Since he lives and works in Massachusetts, Leonard could be suspected of bias, but he turns out to be a turncoat in this regard: he roots for the Philadelphia Eagles. Nor is he alone in his criticisms of the Exponent report. Faculty at Carnegie Mellon, the University of Chicago, Rockefeller University, and other academic centers have all pointed out errors in the report. So this was not the product defense industry’s finest hour—just an indicative one, and one that received more national attention than most.

• • •

It is not an exaggeration to say that in the product defense model, the investigator starts with an answer, then figures out the best way to support it. As often as not, the product defense investigator starts with someone else’s answer, then reviews the evidence or subjects an important study to a post-hoc “re-analysis” that magically produces the sponsor’s preferred conclusions—that the risk is not that high, the harm not that bad, or the data fatally flawed (or maybe all of these at once). These are the studies that are flogged to regulatory agencies or in litigation.

Recognizing these firms’ methods can be tremendously valuable in trying to frame public discourse in today’s toxic political environment. What follows is a kind of disinformation playbook: a field guide to the way science gets sold.

The Weight of the Evidence

One popular tactic—maybe the most popular—is some version of “reviewing the literature.” The basic idea is valid; we do need to consider the scientific studies to date to attempt to answer important questions. The questions that come up in regulation and litigation are complex; they go way beyond simply asking, “Does this chemical cause cancer or lower sperm count or cause developmental damage?” With public health issues, the important and tricky part is determining at what level an exposure can contribute to the undesired effect, and after how much time and exposure. Is there a safe level of exposure, below which a chemical cannot cause disease (or has not, in the case of litigation)? No single study answers such questions, so reviews are warranted.

Sometimes these literature reviews are labeled “weight-of-the-evidence” analyses, with the authors deciding how much importance to give each study. But if your business model, your whole enterprise, is based on being paid by the manufacturers of the product in question for those reviews, your judgment is suspect by definition. If a review was undertaken by conflicted scientists in business to provide conclusions needed by a commercial sponsor to delay regulation or defeat litigation, the findings are tainted and should be discarded. How can we know whether the weight they have assigned different studies has been influenced, intentionally or unconsciously, by the fact that their sponsors want a certain result?

The Risk Assessment

Weight-of-the-evidence reviews generally include both human and animal studies, and the attribution of weight to any given study is generally a subjective, qualitative decision. A more quantitative approach to reviewing the literature entails so-called risk assessment, which in its earnest form attempts to provide estimates of the likelihood of effects at different exposure levels. Importantly, risk assessments attempt to estimate the levels below which exposure to a given substance will cause no harm. But as William Ruckelshaus, the first head of the Environmental Protection Agency, famously said, “Risk assessment data can be like the captured spy: if you torture it long enough, it will tell you almost anything you want.”

This much is true: there is tremendous variation in the results of many risk assessments. There are also individual scientists and firms who can be counted on to produce risk assessments that, conveniently for their sponsors, find significant risk only at levels far above the levels where most exposures are occurring. And if these risk assessments are accepted by regulatory agencies or jurors, the sponsors will be required to spend far less money cleaning up their pollution or compensating victims.

The Re-analysis

By its nature, epidemiology is a sitting duck for the product defense industry’s uncertainty campaigns. Studies in the field are complicated and require complex statistical analyses. Judgment is called for at every step along the way, so good intentions are paramount. Both epidemiologic principles and ethics require that the methods of analysis be selected before the data are actually analyzed. One tactic used by some of the product defense firms is the re-analysis, where the raw data from a completed study are looked at again, changing the way the data are analyzed, often in the most mercenary of ways. The joke about “lies, damned lies, and statistics” pertains.

The battle for the integrity of science is rooted in these sorts of issues around methodology. If a scientist with a certain skill set knows the original outcome and how the data are distributed within the study, it is easy enough to design an alternative analysis that will make positive results disappear. This is especially true with findings that link a toxic exposure to disease later on, which also happen to be among the most important results for public health agencies. In contrast, if an exposure truly has no effect, a post hoc analysis that turns a negative study positive is generally difficult and often not possible, since the disease of interest is spread evenly across the exposed and unexposed parts of the study population.
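To make that asymmetry concrete, here is a minimal sketch in Python using invented numbers. It assumes one hypothetical kind of re-analysis: relabeling some people in each group as belonging to the other, with each relabeled person keeping the disease rate of the group they truly came from. The names `Group`, `relative_risk`, and `swap_exposure_labels` are illustrative only, not drawn from any real study or tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Group:
    """A hypothetical study group: head count and number of disease cases."""
    people: int
    cases: Fraction | int

    @property
    def rate(self) -> Fraction:
        # Exact arithmetic avoids floating-point noise in the comparison.
        return Fraction(self.cases) / self.people


def relative_risk(exposed: Group, unexposed: Group) -> Fraction:
    """Disease rate among the exposed divided by the rate among the unexposed."""
    return exposed.rate / unexposed.rate


def swap_exposure_labels(exposed: Group, unexposed: Group, n: int) -> tuple[Group, Group]:
    """Hypothetical re-analysis: relabel n people in each group as the other group.

    Assumes each relabeled person keeps the disease rate of the group they truly
    belong to, so both groups keep their original sizes after the swap.
    """
    cases_leaving_exposed = exposed.rate * n
    cases_leaving_unexposed = unexposed.rate * n
    new_exposed = Group(exposed.people,
                        exposed.cases - cases_leaving_exposed + cases_leaving_unexposed)
    new_unexposed = Group(unexposed.people,
                          unexposed.cases - cases_leaving_unexposed + cases_leaving_exposed)
    return new_exposed, new_unexposed


# Invented positive study: 8% of exposed vs. 2% of unexposed people develop disease.
exposed, unexposed = Group(1000, 80), Group(1000, 20)
print(relative_risk(exposed, unexposed))                              # 4
print(relative_risk(*swap_exposure_labels(exposed, unexposed, 400)))  # 14/11, about 1.27
print(relative_risk(*swap_exposure_labels(exposed, unexposed, 500)))  # 1

# Invented null study: 3% in both groups, so relabeling cannot create an effect.
null_exposed, null_unexposed = Group(1000, 30), Group(1000, 30)
print(relative_risk(*swap_exposure_labels(null_exposed, null_unexposed, 400)))  # 1
```

Relabeling enough people drags the positive study's relative risk from 4 all the way down to 1, while the same maneuver leaves the null study exactly where it started, which is the asymmetry described above.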

As with most things about product defense, the re-analysis strategy dates back to the tobacco industry. Its strategists recognized that they needed a means to counter early findings on the dangers of secondhand smoke, in particular the elevated lung cancer risk among nonsmoking spouses of smokers, in order to escape responsibility and regulation. From a public health perspective, a 25 percent increase in cancer risk is a big deal. To industry, making it disappear would be a huge deal, and that is why they brought in the re-analysts. The strategists also realized that they couldn’t mount their own studies, which would take years and millions of dollars, so they figured they could get the raw data from the incriminating studies, change some of the basic assumptions, change the parameters, tinker with this and that, and make the results go away. Tobacco’s approach is now commonplace; “re-analysis” is its own cottage industry within product defense.

The Back-in-Time Simulation

In many mercenary re-analyses of epidemiologic studies that find increased risk of disease associated with low levels of exposure to a given chemical, the product defense scientists decide that the actual exposures in the study were in fact far higher than those estimated by the scientists who did the original study. This is farcical, of course, but highly useful: a retrospective adjustment to the exposure level is guaranteed to change the results of the study, making the exposure look safer because now only those higher levels cause disease. And, of course, the conflicted scientists doing the re-analysis know very well this is exactly what will happen if they juice up the exposure estimates.

But when there is no longer any debate about whether exposure to a substance causes disease at a given level, a firm whose product is under attack may want to show that historical exposures were lower, not higher. These types of studies, usually conducted by attempting to recreate historical exposure levels in a laboratory, are generally done only for high-stakes court cases, since there is little if any scientific interest in revisiting settled science around old exposure levels. The basic model sometimes involves finding the original product (often one that is no longer manufactured or in use) and then simulating the exposure that a plaintiff in a court case would have experienced decades earlier. Pretty much the only reason these studies get published in a scientific journal is so the expert can testify that their study was peer-reviewed.
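The arithmetic behind the first tactic in this section, inflating a study's exposure estimates, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: a hypothetical study in which excess disease rises in a straight line with exposure, fitted through the origin by least squares. The exposure levels, case counts, fivefold inflation factor, and the helper names `slope_through_origin` and `inflate` are all invented for illustration.

```python
from __future__ import annotations

from fractions import Fraction


def slope_through_origin(exposures: list[int], excess_cases: list[int]) -> Fraction:
    """Least-squares slope (excess cases per unit exposure) for a line through the origin."""
    numerator = sum(x * y for x, y in zip(exposures, excess_cases))
    denominator = sum(x * x for x in exposures)
    return Fraction(numerator, denominator)


def inflate(exposures: list[int], factor: int) -> list[int]:
    """Hypothetical re-analysis step: scale every exposure estimate up by `factor`."""
    return [x * factor for x in exposures]


# Invented study: excess cases per 1,000 workers at three estimated exposure levels (ppm).
exposures = [1, 2, 4]
excess_cases = [10, 20, 40]

original = slope_through_origin(exposures, excess_cases)
reanalyzed = slope_through_origin(inflate(exposures, 5), excess_cases)

print(original)    # 10 excess cases per 1,000 workers per ppm
print(reanalyzed)  # 2
# Exposure needed to produce 10 excess cases per 1,000 workers under each fit:
print(Fraction(10) / original, Fraction(10) / reanalyzed)  # 1 5
```

Nothing about the disease counts changes; multiplying every exposure estimate by five simply divides the estimated harm per unit of exposure by five, so the level that appears to cause disease moves five times higher and typical exposures look correspondingly safer.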

The “Independence” Gambit

Many papers produced by the product defense firms contain the disclosure that individual scientists may be testifying for the corporations being sued, but that the research itself was done independently of the corporations. This sleight of hand creates a fiction of independence, which in turn lends the work a fiction of objectivity, but the research was almost certainly paid for by the product defense firm out of the fees it received from the corporation. It is a charade, but also standard practice.

Front Groups

A different kind of conflict of interest, and a different kind of disclosure trickery, is the use of front groups by many industries to advance their interests while hiding their involvement. These fronts are generally incorporated as not-for-profits, with innocuous-sounding names and academic scientists in leadership positions, but they are bought and paid for by their various corporate sponsors, and many of them sponsor “research” to be used in regulatory proceedings or in court. In addition, there are the all-corporate-purpose think tanks devoted to “free enterprise” and “free markets” and “deregulation.” Dozens of them work on behalf of just about every significant industry in this country. Each year these entities collect millions of dollars from regulated companies to promote campaigns that weaken public health and environmental protections.

Always, the idea is to portray front groups as serious, independent purveyors of scientific research. And some do produce legitimate science, while at the same time producing highly questionable science that their sponsors rely on to promote their unhealthy products. It’s a delicate balancing act.

• • •

These methods seriously threaten the progress made over the last several decades in protecting the science underpinning our public health and environmental protections. Tobacco’s uncertainty campaign from the 1950s is serving as the template for corporate behavior once again in 2020. Dark money rules. Corporations or rich individuals pour money into organizations set up as “educational” nonprofits, whose objective is to sow confusion and uncertainty on climate change, toxic chemicals, or the health effects of soda or alcoholic beverages. There is no easy way to find out the hidden funders of some of these groups. Secrets abound, and much of what we have learned comes from either lawsuits or, occasionally, careless mistakes in which donors are identified by accident.

Manufactured doubt is everywhere, defending dangerous products in the food we eat, the beverages we drink, and the air we breathe. The direct impact is thousands of people needlessly sickened. There is no question that if these “uncertainty” campaigns had not been waged, we would have a far healthier population and a cleaner environment. Following the U.S. election of Donald Trump, the fundamentals of evidence-based policymaking came under unprecedented attack. Just as unwelcome news became fake news, unwelcome science became fake science. Incredibly, the federal government elevated studies conjured by product defense specialists over the studies done by independent, academic scientists. Worse, perhaps, the scientists whose careers have been defined by their science-for-sale studies exonerating toxic chemicals were brought inside, running or advising the very agencies that regulate those chemicals.

All this backsliding demands our action and attention. The scientific enterprise, like democratic society itself, is at a crossroads. This is an opportune moment—the necessary moment—to look hard at how science can be used to protect our health and planetary well-being, but also misused to damage them.

Adapted from THE TRIUMPH OF DOUBT: Dark Money and the Science of Deception by David Michaels. Copyright 2020 by David Michaels and published by Oxford University Press. All rights reserved.