On January 29 the Hubei Daily, a state-owned Chinese newspaper based in Wuhan, reported on a promising development. Teams of researchers associated with the Chinese Academy of Military Medical Sciences and the Wuhan Institute of Virology had tested dozens of existing pharmaceuticals for possible efficacy against the novel coronavirus that causes COVID-19. They had identified three antiviral drugs that seemed to inhibit the virus from reproducing or infecting other cells in a test tube.

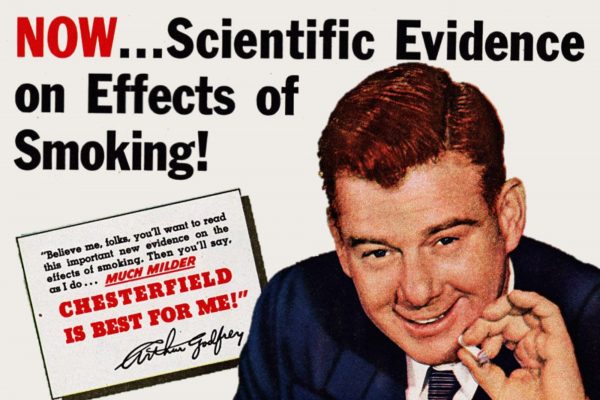

Within a week the highly regarded journal Cell Research published a peer-reviewed letter by researchers at the Wuhan Institute of Virology that reported on two of these in more detail: chloroquine, developed in the 1930s to treat malaria, and remdesivir, a newer drug developed for Ebola. Within days Chinese researchers announced new clinical studies to test these drugs in patients, along with another antimalarial drug, hydroxychloroquine, which is derived from chloroquine and is generally considered safer. The science has continued apace, and results of most of the clinical studies are still pending.

In the meantime, something strange happened. It started with a series of tweets. On March 11 an Australian entrepreneur living in China tweeted at a Bitcoin investor that chloroquine would “keep most people out of hospital.” That investor then co-authored and shared a document making the case for chloroquine. On March 16 Elon Musk began tweeting about chloroquine and hydroxychloroquine and shared that document. Two days later Tucker Carlson did a segment on Fox News discussing these drugs with one of the document’s co-authors. The next day, March 19, President Trump gave a press conference in which he announced that chloroquine and hydroxychloroquine had shown “very, very encouraging” early results. Since then, Trump has repeatedly touted hydroxychloroquine as a COVID-19 miracle drug.

Over the following weeks, the question of whether hydroxychloroquine is a safe and effective treatment for COVID-19 became a locus for political tribalism and polarization. Trump supporters on social media share evidence, often anecdotal or clinical, that hydroxychloroquine is effective; Trump’s critics share evidence that it is not and argue there are significant costs to promoting an unproven drug. Even traditional media has weighed in. The right-leaning Wall Street Journal published an opinion piece by doctors supporting the use of the drug; the left-leaning Washington Post emphasized that there are warnings from medical experts about “dangerous consequences” of using it to treat COVID-19.

Of course, polarization is hardly a new phenomenon in the United States. Growing polarization over political values has bled into polarized beliefs about matters of fact, from the relative sizes of Trump’s and Obama’s inauguration crowds to what the U.S. unemployment rate actually is. And issues of both established and ongoing scientific research are not immune: just consider polarization over global climate change, evolutionary theory, and vaccine safety.

This essay is featured in Thinking in a Pandemic.

With the arrival of COVID-19, new opportunities for polarization have emerged. Recent surveys have found a stark divide, with Democrats consistently expressing greater concern about the seriousness of COVID-19, while Republicans are more likely to think it is exaggerated. More Democrats report taking precautions such as avoiding crowds and washing their hands. And these differences seem to extend to specific matters of fact, such as the efficacy of hydroxychloroquine.

Yet with all this polarization, there is still something distinctive and puzzling about these disagreements over COVID-19. Most notable cases of polarization over matters of fact have relatively mild day-to-day consequences. Nobody dies from skepticism about evolution. And while skepticism about global climate change or vaccines may ultimately cause significant harms, long time scales in the first case and herd immunity in the second help to protect non-believers from immediate consequences. Given that COVID-19 can kill within a matter of weeks, and that bad choices can put a person in immediate danger, one might think there would not be much room for tribalism. After all, we do not expect polarization over whether, say, drinking antifreeze—or injecting disinfectants, for that matter—is a good idea.

Why are we seeing polarization over hydroxychloroquine, then, in spite of the serious consequences? The explanation may lie in the kind of information available to the public about COVID-19, which differs importantly from what we see in other cases of polarization about science. When it comes to the health effects of injecting disinfectants, there is no uncertainty about the massive risks. And for that reason, we don’t expect polarization to emerge, even if Trump suggests trying it. But even the best information about COVID-19 is in a state of constant flux. Scientists are publishing new articles every day, while old articles and claims are retracted or refuted. Norms of scientific publication, which usually dictate slower timeframes and more thorough peer review, have been relaxed by scientific communities desperately seeking solutions. And with readers clamoring for the latest virus news, journalists are on the hunt for new articles they can report on, sometimes pushing claims into prime time before they’ve been properly vetted.

All this means that there is a huge amount of information circulating that has some scientific legitimacy but that may be dramatically underdeveloped and more likely than normal scientific findings to be overturned. Claims about hydroxychloroquine fall into this category. Despite widely reported but hardly definitive recent studies, which Trump’s media critics have latched onto as evidence that hydroxychloroquine does not improve outcomes, the scientific jury is still out. We do not yet know whether hydroxychloroquine, remdesivir, or other possible treatments are effective for COVID-19.

This legitimate uncertainty means that pundits and journalists who treat claims supporting hydroxychloroquine as akin to typical misinformation (or radical conspiracy theories) are misdiagnosing the situation. Trumpeting hydroxychloroquine as a miracle drug is undoubtedly risky, both because current evidence is too mixed to support that claim and because it can lead to problems like drug hoarding. But sharing anecdotal accounts of the success of hydroxychloroquine in various clinical settings is not necessarily misinformation, and neither is sharing information about failed clinical trials or shortages for patients who need the drug for other purposes. These are all pieces of evidence that should inform any reasonable person’s beliefs about hydroxychloroquine and COVID-19.

This is not to say that nothing has gone badly wrong with the public discourse about the drug. Amidst a sea of uncertainty, people are deciding which way to swim by attending to social factors, rather than scientific ones.

Part of the reason this happens is that facts can mislead when they are shared with incomplete context or without other relevant facts. Telling one isolated truth, rather than the whole truth, can be just as bad as telling a falsehood. This is especially true for issues related to human health, where data is often messy. Some COVID-19 patients who take hydroxychloroquine will recover; some will die. What is difficult to determine is how many who recover would have recovered anyway, and how many who die after taking the drug would have died anyway. In the absence of high-quality studies, determining the proper context for any fact is exceptionally difficult, and even experts struggle to do it well.

The upshot is perhaps counterintuitive: people can wind up misinformed even in the absence of misinformation. Or maybe it is best to think of misinformation as a function of the whole ecology of information available—how it is framed, who shares it, where it gets circulated, and so on—not simply a matter of isolated claims being true or false, more justified or less.

In particular, people become misinformed because they tend to trust those they identify with, meaning they are more likely to listen to those who share their social and political identities. When public figures such as Donald Trump and Rush Limbaugh make claims about hydroxychloroquine, Republicans are more likely to be swayed, while Democrats are not. The two groups then start sharing different sorts of information about hydroxychloroquine, and stop trusting what they see from the other side.

People also like to conform with those in their social networks. It is often psychologically uncomfortable to disagree with our closest friends and family members. But different clusters or cliques can end up conforming to different claims. Some people fit in by rolling their eyes about hydroxychloroquine, while others fit in by praising Trump for supporting it.

These social factors can lead to belief factions: groups of people who share a number of polarized beliefs. As philosophers of science, we’ve used models to argue that when these factions form, there need not be any underlying logic to the beliefs that get lumped together. Beliefs about the safety of gun ownership, for example, can start to correlate with beliefs about whether there were weapons of mass destruction in Iraq. When this happens, beliefs can become signals of group membership, even for something as dangerous as an emerging pandemic. One person might show which tribe they belong to by sewing their own face mask. Another by throwing a barbecue, despite stay-at-home orders.

And yet another might signal group membership by posting a screed about hydroxychloroquine. There is nothing about hydroxychloroquine in particular that makes it a natural talking point for Republicans. It could just as easily have been remdesivir, or one of a half dozen other potential miracle drugs, that was picked up by Fox News, and then by Trump. The process by which Trump settled on hydroxychloroquine was essentially random—and yet, once he began touting it, it became associated with political identity in just the way we have described. (That is not to say that Trump and his media defenders were not on the lookout for an easy out from a growing crisis. Political leaders around the world would love to see this all disappear, irrespective of ideology.)

Sharing encouraging news about possible treatments for a devastating disease is an appropriate thing for political leaders or public health officials to do under many circumstances. But it must be done with utmost care, not only because the information politicians share may directly influence others’ behaviors in dangerous ways, but also because the very fact that a politician or government expert is perceived as representing a particular political tribe can mean their information becomes attached to their positions in preexisting disagreements. The result is that some substantial portion of the population—and in the case of hydroxychloroquine, we still do not know whether it will be Republicans or Democrats—ends up inappropriately skeptical about an important matter of fact.

This tribalism about COVID-19 may be exacerbated by media practices. There is tremendous interest in the disease, and thus tremendous opportunity for journalists to capture readership. Readers are drawn to claims that are surprising and novel, including those that emphasize extreme events. For instance, we see many articles about the most overwhelmed hospitals in the world and the worst-case scenario predictions for COVID-19 deaths, even when many other hospitals in the same regions are not overwhelmed and well-informed predictions of total fatalities vary widely. By contrast, evidence that fits neatly into our current, best theories of COVID-19 is relatively underreported in the mainstream news.

This bias toward extremes means that once opposing camps have formed, there is a lot of fodder for each side to appeal to as evidence of bias. Furthermore, with COVID-19, it is often the case that the different groups only trust one of the extremes. Extremity bias can thus amplify polarization, especially in an already factionalized environment.

The end result is that even without misinformation, or with relatively little of it, we can end up misinformed. And misinformed decision makers—from patients, to physicians, to public health experts and politicians—will not be able to act judiciously. In the present crisis, this is a matter of life and death.

There are no easy solutions to polarization, writ large. Telling journalists not to report on extreme events is hopeless, though we might do well to call for more nuanced and contextualized reporting—telling the whole truth, rather than some isolated part of it. Politicians, for their part, have plenty of incentives for playing up polarization. But individuals, including physicians and others whose expertise we rely on, can resist, by attempting to recognize the ways that their own belief factions may be distorting the evidence they see and trust. Perhaps more importantly, we must recognize that not everything shared or believed by those with whom we disagree is misinformation, even if it later turns out to have been false.