This essay appears in print in Thinking in a Pandemic.

COVID-19’s impact has been swift, widespread, and devastating. Never have so many clinicians devoted themselves so resolutely, at significant personal risk, to care for people with a single disease that is not yet in the textbooks. Never have so many scientists worked so fast to generate and summarize research findings in real time. Never have policymakers struggled so hard to apply a complex and contested evidence base to avert an escalating crisis.

And yet, as I write from Oxford, the United Kingdom’s daily death toll remains in the hundreds despite weeks of lockdown. In this country at least, we are failing. One of the many issues thrown up by the current confusion is the relationship between research and policy, a matter recently explored in an exchange in these pages. A leitmotif in the UK government’s announcements, in particular, is the claim that in its response to COVID-19 it is “following the science.” One policy delay after another (testing at ports and airports, quarantining of arrivals from known hotspots, closing schools, banning large gatherings, social distancing, lockdown, controlling outbreaks in care homes, widespread testing, face coverings for the lay public) has been endorsed by the government and its formal advisory bodies solely on the grounds that “the evidence base is weak” or even that “the evidence isn’t there.” The implication is that good policy must wait for good evidence, and in the absence of the latter, inaction is best.

In all the above examples, some evidence in support of active intervention existed at the time the government made the decision not to act. In relation to face coverings, for example, there was basic science evidence on how the virus behaves. There were service-level data from hospital and general practitioner records. There were detailed comparative data on the health system and policy responses of different countries. There were computer modeling studies. There was a wealth of anecdotal evidence (for example, one general practitioner reported deaths of 125 patients across a handful of residential care homes). But for the UK government’s Scientific Advisory Group for Emergencies (SAGE), whose raison d’être is “ensuring that timely and coordinated scientific advice is made available to decision makers,” this evidence carried little weight against the absence of a particular kind of evidence: that from randomized controlled trials and other so-called “robust” designs.

We could be waiting a very long time and paying a very high human price for the UK government’s ultra-cautious approach to “evidence-based” policy. Indeed, I hypothesize that evidence-based medicine—or at least, its exalted position in the scientific pecking order—will turn out to be one of the more unlikely casualties of the COVID-19 pandemic.

The term “evidence-based medicine” was introduced in 1992; it refers to the use of empirical research findings, especially but not exclusively from randomized controlled trials, to inform individual treatment decisions. As a school of medical thinking, it is underpinned by a set of philosophical and methodological assumptions. It embraces an intellectual and social movement with which many are proud to identify. Indeed, I’ve identified with it myself. I have been involved with the movement from the early 1990s; my book How to Read a Paper: The Basics of Evidence-Based Medicine and Healthcare has sold over 100,000 copies and is now in its sixth edition. In my day job at the University of Oxford I help run the Oxford COVID-19 Evidence Service, producing rapid systematic reviews to assist the global pandemic response, though I’ve also led some strong critiques of the movement.

Through its highly systematic approach to the production of systematic reviews and the development and dissemination of clinical practice guidelines, evidence-based medicine has saved many lives, including my own. Five years ago I was diagnosed with an aggressive form of breast cancer. I was given three treatments, all of which had been rigorously tested in randomized controlled trials and were included in a national clinical practice guideline: surgery to remove the tumor; a course of chemotherapy; and a drug called Herceptin (a monoclonal antibody against a surface protein being displayed by my cancer cells).

As the cancer physician Siddhartha Mukherjee describes in his book The Emperor of All Maladies (2010), before randomized controlled trials showed us what worked in different kinds of breast cancer, women were routinely subjected to mutilating operations and dangerous drugs by well-meaning doctors who assumed that “heroic” surgery would help save their lives, when such treatment actually contributed to their deaths. With a relatively gentle evidence-based care package, I made a full recovery and have been free of cancer for the past four years. (Incidentally, while going through treatment, I discovered that women who die from breast cancer have often been following quack cures and refusing conventional treatment, so I wrote a book with breast surgeon and fellow breast cancer survivor Liz O’Riordan on how evidence-based medicine saved our lives.)

But as its critical friends have pointed out, evidence-based medicine’s assumptions do not always hold. Treatment recommendations based on population averages derived from randomized trials do not suit every patient: individual characteristics must be taken into account. While proponents of evidence-based medicine acknowledged early that explanations from the basic sciences should precede and support empirical trials rather than be replaced by them, the injunction tends to be honored more in the breach than the observance. And as several observers have noted, “preferred” study designs (randomized trials, systematic reviews, and meta-analyses), methodological tick-lists, and risk-of-bias tools can be manipulated by vested interests, allowing them to brand questionable products and policies as “evidence-based.”

In practice, the neat simplicity of an intervention-on versus intervention-off experiment designed to produce a definitive—that is, statistically significant and widely generalizable—answer to a focused question is sometimes impossible. Examples that rarely lend themselves to such a design include upstream preventive public health interventions aimed at supporting widespread and sustained behavior change across an entire population (as opposed to testing the impact of a short-term behavior change in a select sample). In population-wide public health interventions such as diet, alcohol consumption, exercise, recreational drug use, or early-years development, we must not only persuade individuals to change their behavior but also change the environment to make such changes easier to make and sustain.

These system-level efforts are typically iterative, locally grown, and path-dependent, and they have an established methodology for rapid evaluation and adaptation. But because of the longstanding dominance of the evidence-based medicine paradigm, such designs have tended to be classified as a scientific compromise of inherently low methodological quality. While this has been recognized as a problem in public health practice for some time, the inadequacy of the prevailing paradigm has suddenly become mission-critical.

Notwithstanding the oft-repeated cliché that the choice of study design must reflect the type of question (randomized trials, for example, are the preferred design only for therapy questions), many senior scientists interpret evidence-based medicine’s hierarchy of evidence narrowly and uncompromisingly. A group convened by the Royal Society recently produced a narrative review on the use of face masks by the general public which incorporated a wide range of evidence from basic science, mathematical modeling, and policy studies. In response, one professor of epidemiology publicly announced:

That is not a piece of research. That is a non-systematic review of anecdotical [sic] and non-clinical studies. The evidence we need before we implement public interventions involving billions of people, must come ideally from randomised controlled trials at population level or at least from observational follow-up studies with comparison groups. This will allow us to quantify the positive and negative effects of wearing masks.

This comment, which assigns evidence from experimental trials a more exalted role in policy than does the Nobel Prize–winning President of the Royal Society, reveals two unexamined assumptions. First, that the precise quantification of impact from every intervention is both desirable and possible. Second, that the best course of action, both scientifically and morally, is to do nothing until we have definitive findings from a large, comparative study that is unlikely ever to be done. On the contrary, medicine often can and does act more promptly when the need arises.

The principle of acting on partial or indirect evidence, for example, underpins the off-label use of potentially lifesaving medication for new diseases. This happened recently when the U.S. Food and Drug Administration (FDA) issued an emergency use authorization for hydroxychloroquine in patients with severe COVID-19 in certain limited circumstances. Notwithstanding the FDA’s own description of the evidence base for this indication as “anecdotal,” and reports of harm as well as possible benefit, strong arguments have been made for the cautious prescription of this drug for selected patients with careful monitoring until the results of ongoing trials are available. Nevertheless, criticisms that the FDA’s approach was “not evidence based” were quick to appear.

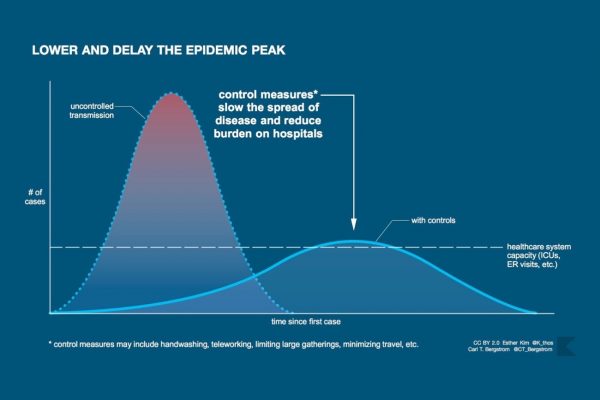

If evidence-based medicine is not the right scientific paradigm for this moment, what is? The framework of complex adaptive systems may be better suited to the analysis of fast-moving infectious diseases.

This paradigm proposes that precise quantification of particular cause-effect relationships in the real world is both impossible (because such relationships are not constant and cannot be meaningfully isolated) and unnecessary (because what matters is what emerges in a particular unique situation). In settings where multiple factors are interacting in dynamic and unpredictable ways, a complex systems approach emphasizes the value of naturalistic methods (where scientists observe and even participate in real-world phenomena, as in anthropological fieldwork) and rapid-cycle evaluation (that is, collecting data in a systematic but pragmatic way and feeding it back in a timely way to inform ongoing improvement). The logic of evidence-based medicine, in which scientists pursue the goals of certainty, predictability, and linear causality, remains useful in some circumstances (for example, in the ongoing randomized controlled trials of treatments for COVID-19). But at a public health rather than individual patient level, we need to embrace other epistemic frameworks and use methods to study how best to cope with uncertainty, unpredictability, and non-linear causality.

The key scientific question about an intervention in a complex system is not “What is the effect size?” or “Is the effect statistically significant, controlling for all other variables?” but “Does this intervention contribute, along with numerous other factors, to a desired outcome in this case?” It is entirely plausible that, as with raising a child, multiple interventions might each contribute to an overall beneficial effect through heterogeneous effects on disparate causal pathways, even though none of these interventions individually would have a statistically significant impact on any predefined variable.
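The arithmetic behind that last point can be made concrete with a deliberately crude sketch. It assumes a hypothetical two-arm comparison with 200 people per arm, five interventions that each shift an outcome by 0.1 standard deviations, and effects that simply add up. None of these figures comes from a real study, and simple additivity is exactly the kind of linear simplification a complex systems view would question; the point is only that a package can be clearly detectable when no single component is.

```python
import math

CRITICAL_Z = 1.96  # conventional two-sided 5% significance threshold


def expected_z(effect_sd_units: float, n_per_arm: int) -> float:
    """Expected z-statistic for a difference in means between two equal arms.

    effect_sd_units: assumed true difference, in standard-deviation units.
    n_per_arm: number of participants in each arm (hypothetical).
    """
    standard_error = math.sqrt(2.0 / n_per_arm)  # SE of a difference in means
    return effect_sd_units / standard_error


# Hypothetical numbers, chosen only for illustration.
single = expected_z(0.1, 200)        # one intervention evaluated alone
combined = expected_z(5 * 0.1, 200)  # five interventions, effects assumed additive

print(f"single intervention: z = {single:.2f}, significant: {single > CRITICAL_Z}")
print(f"combined package:    z = {combined:.2f}, significant: {combined > CRITICAL_Z}")
```

Under these assumptions each intervention, tested alone, would be expected to fall short of conventional significance, while the package as a whole would not.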

One everyday example of the use of complex causality thinking is the approach used by Dave Brailsford, who was appointed performance director of the British Cycling team in the mid-2000s. In a few short years, through changes in everything from the pitch of the saddles to the number of minutes’ recovery between training intervals, he brought the team from halfway down the world league table to the very top, winning eight of the fourteen gold medals in track and road cycling in the 2008 Beijing Olympics and setting nine Olympic records in London 2012. In Brailsford’s words, “The whole principle came from the idea that if you broke down everything you could think of that goes into riding a bike, and then improved it by 1 percent, you will get a significant increase when you put them all together.”

There is something to the idea. Marginal gains in a complex system come not just from linear improvements in individual factors but also from synergistic interaction between them, where a tiny improvement in (say) an athlete’s quality of sleep produces a larger improvement in her ability to sustain an endurance session and even larger improvements in her confidence and speed on the ground.
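A back-of-the-envelope calculation shows why 1 percent gains can add up to more than they seem. The sketch below compares two toy models: one in which gains simply add, and one in which they multiply, which is used here as a crude stand-in for factors that reinforce one another. The multiplicative model, the function names, and the factor counts are illustrative assumptions, not a description of how British Cycling measured performance.

```python
def additive_gain(n_factors: int, gain_per_factor: float = 0.01) -> float:
    """Overall fractional improvement if the individual gains simply add."""
    return n_factors * gain_per_factor


def compounded_gain(n_factors: int, gain_per_factor: float = 0.01) -> float:
    """Overall fractional improvement if the gains multiply together.

    Multiplication is an illustrative assumption standing in for factors
    that reinforce one another rather than acting in isolation.
    """
    return (1.0 + gain_per_factor) ** n_factors - 1.0


# Hypothetical numbers of factors, each improved by 1 percent.
for n in (10, 50, 70):
    print(
        f"{n} factors: additive {additive_gain(n):.1%}, "
        f"compounded {compounded_gain(n):.1%}"
    )
```

On the multiplicative assumption, roughly seventy 1 percent improvements would double overall performance, whereas the additive model credits them with a 70 percent gain. Real synergies need not follow either formula.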

As Harry Rutter and colleagues explain in a recent article in The Lancet, “The Need for a Complex Systems Model of Evidence for Public Health,” a similar principle can be applied to public health interventions. Take, for example, the “walking school bus” in which children are escorted to school in a long crocodile, starting at the house of the farthest away and picking up the others on the way. (I learned of this example thanks to a lecture by Professor Harry Rutter.) Randomized controlled trials of such schemes have rarely demonstrated statistically significant impacts on predefined health-related outcomes. But a more holistic evaluation demonstrates benefits in all kinds of areas: small improvements in body mass index and fitness, but also extended geographies (the children get to know their own neighborhood better), more positive attitudes toward exercise from parents, parents commenting that children were less tired when they walked to and from school, and children reporting more enjoyment of exercise. Taken together, these marginal gains make the walking school bus an idea worth backing.

To elucidate such complex influences, we need research designs and methods that foreground dynamic interactions and emergence—most notably, in-depth, mixed-method case studies (primary research) and narrative reviews (secondary research) that tease out interconnections and incorporate an understanding of generative causality within the system as a whole.

In a recent paper David Ogilvie and colleagues argue that rather than considering these two contrasting paradigms as competing and mutually exclusive, they should be brought together. The authors depict randomized trials (what they call the “evidence-based practice pathway”) and natural experiments (the “practice-based evidence pathway”) not in a hierarchical relationship but in a complementary and recursive one. And they propose that “intervention studies should focus on reducing critical uncertainties, that non-randomized study designs should be embraced rather than tolerated and that a more nuanced approach to appraising the utility of diverse types of evidence is required.”

Under a practice-based evidence approach, implementing new policy interventions in the absence of randomized trial evidence is neither back-to-front nor unrigorous. In a fast-moving pandemic, the cost of inaction is counted in the grim mortality figures announced daily, but the costs and benefits—both financial and human—of different public health actions (lockdown, quarantining arrivals, digital contact tracing) are extremely hard to predict because of interdependencies and unintended consequences. In this context, many scientists, as well as the press and the public, are beginning to question whether evidence-based medicine’s linear, cause-and-effect reasoning and uncompromising hierarchy of evidence deserve to remain on the pedestal they have enjoyed for the past twenty-five years.

COVID-19 is the biggest comparative case study of “evidence-based” policymaking the world has ever known. National leaders everywhere face the same threat; their citizens will judge them on how quickly and effectively they contain it. Politicians have listened to different scientists telling different kinds of stories and making different kinds of assumptions. History will soon tell us whether evidence-based medicine’s tablets of stone have helped or hindered the public health response to COVID-19.

To reiterate the point I made earlier, there is no doubt that evidence-based medicine has offered and continues to offer powerful insights and still has an important place in the evaluation of therapies. But its principles have arguably been naïvely and indiscriminately over-applied in the current pandemic. The maxim of primum non nocere—first, do no harm—may entail that in ordinary medical practice we should not prescribe therapies until they are justified through a definitive experimental trial. But that narrow interpretation of the Hippocratic principle does not necessarily apply in all contexts, especially when hundreds are dying daily from a disease with no known treatment. On the contrary, it is imperative to try out, with rapid, pragmatic evaluation and iterative improvement cycles, policies that have a plausible chance of working. The definition of good medical and public health practice must be urgently updated.
